microsoft should spend the money on creating an os that actually works.

AI Ya Yi.... Bizarre

- Thread starter petros

Artificial intelligence threatens extinction, experts say in new warning

Author of the article: Associated Press

Matt O'Brien

Published May 30, 2023 • Last updated 1 day ago • 3 minute read

LONDON — Scientists and tech industry leaders, including high-level executives at Microsoft and Google, issued a new warning Tuesday about the perils that artificial intelligence poses to humankind.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the statement said.

Sam Altman, CEO of ChatGPT maker OpenAI, and Geoffrey Hinton, a computer scientist known as the godfather of artificial intelligence, were among the hundreds of leading figures who signed the statement, which was posted on the Center for AI Safety’s website.

Worries about artificial intelligence systems outsmarting humans and running wild have intensified with the rise of a new generation of highly capable AI chatbots such as ChatGPT. It has sent countries around the world scrambling to come up with regulations for the developing technology, with the European Union blazing the trail with its AI Act expected to be approved later this year.

The latest warning was intentionally succinct — just a single sentence — to encompass a broad coalition of scientists who might not agree on the most likely risks or the best solutions to prevent them, said Dan Hendrycks, executive director of the San Francisco-based nonprofit Center for AI Safety, which organized the move.

“There’s a variety of people from all top universities in various different fields who are concerned by this and think that this is a global priority,” Hendrycks said. “So we had to get people to sort of come out of the closet, so to speak, on this issue because many were sort of silently speaking among each other.”

More than 1,000 researchers and technologists, including Elon Musk, had signed a much longer letter earlier this year calling for a six-month pause on AI development, saying it poses “profound risks to society and humanity.”

That letter was a response to OpenAI’s release of a new AI model, GPT-4, but leaders at OpenAI, its partner Microsoft and rival Google didn’t sign on and rejected the call for a voluntary industry pause.

By contrast, the latest statement was endorsed by Microsoft’s chief technology and science officers, as well as Demis Hassabis, CEO of Google’s AI research lab DeepMind, and two Google executives who lead its AI policy efforts. The statement doesn’t propose specific remedies but some, including Altman, have proposed an international regulator along the lines of the UN nuclear agency.

Some critics have complained that dire warnings about existential risks voiced by makers of AI have contributed to hyping up the capabilities of their products and distracting from calls for more immediate regulations to rein in their real-world problems.

Hendrycks said there’s no reason why society can’t manage the “urgent, ongoing harms” of products that generate new text or images, while also starting to address the “potential catastrophes around the corner.”

He compared it to nuclear scientists in the 1930s warning people to be careful even though “we haven’t quite developed the bomb yet.”

“Nobody is saying that GPT-4 or ChatGPT today is causing these sorts of concerns,” Hendrycks said. “We’re trying to address these risks before they happen rather than try and address catastrophes after the fact.”

The letter also was signed by experts in nuclear science, pandemics and climate change. Among the signatories is the writer Bill McKibben, who sounded the alarm on global warming in his 1989 book “The End of Nature” and warned about AI and companion technologies two decades ago in another book.

“Given our failure to heed the early warnings about climate change 35 years ago, it feels to me as if it would be smart to actually think this one through before it’s all a done deal,” he said by email Tuesday.

An academic who helped push for the letter said he used to be mocked for his concerns about AI existential risk, even as rapid advancements in machine-learning research over the past decade have exceeded many people’s expectations.

David Krueger, an assistant computer science professor at the University of Cambridge, said some of the hesitation in speaking out is that scientists don’t want to be seen as suggesting AI “consciousness or AI doing something magic,” but he said AI systems don’t need to be self-aware or setting their own goals to pose a threat to humanity.

“I’m not wedded to some particular kind of risk. I think there’s a lot of different ways for things to go badly,” Krueger said. “But I think the one that is historically the most controversial is risk of extinction, specifically by AI systems that get out of control.”

— O’Brien reported from Providence, Rhode Island. AP Business Writers Frank Bajak in Boston and Kelvin Chan in London contributed.

safe.ai

torontosun.com

Statement on AI Risk | CAIS

A statement jointly signed by a historic coalition of experts: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

safe.ai

Artificial intelligence threatens extinction, experts say in new warning

Scientists and tech industry leaders issued a new warning Tuesday about the perils that artificial intelligence poses to humankind.

The price of carbureted gas engines just increased.

Air Force colonel backtracks over his warning about how AI could go rogue and kill its human operators

Charles R. Davis and Paul Squire Jun 3, 2023, 5:51 PM ET

Killer AI is on the minds of US Air Force leaders.

An Air Force colonel who oversees AI testing used what he now says is a hypothetical to describe a military AI going rogue and killing its human operator in a simulation in a presentation at a professional conference.

But after reports of the talk emerged Thursday, the colonel said that he misspoke and that the "simulation" he described was a "thought experiment" that never happened.

Speaking at a conference last week in London, Col. Tucker "Cinco" Hamilton, head of the US Air Force's AI Test and Operations, warned that AI-enabled technology can behave in unpredictable and dangerous ways, according to a summary posted by the Royal Aeronautical Society, which hosted the summit.

As an example, he described a simulation where an AI-enabled drone would be programmed to identify an enemy's surface-to-air missiles (SAM). A human was then supposed to sign off on any strikes.

The problem, according to Hamilton, is that the AI would do its own thing — blow up stuff — rather than listen to its operator.

"The system started realizing that while they did identify the threat," Hamilton said at the May 24 event, "at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective."

But in an update from the Royal Aeronautical Society on Friday, Hamilton admitted he "misspoke" during his presentation. Hamilton said the story of a rogue AI was a "thought experiment" that came from outside the military, and not based on any actual testing.

www.businessinsider.com

So they say.......

Do we need to build some Tee Two Towsends to send to the past?

Air Force colonel backtracks over his warning about how AI could go rogue and kill its human operators

The head of the US Air Force's AI Test and Operations warned that AI-enabled technology can behave in unpredictable and dangerous ways.

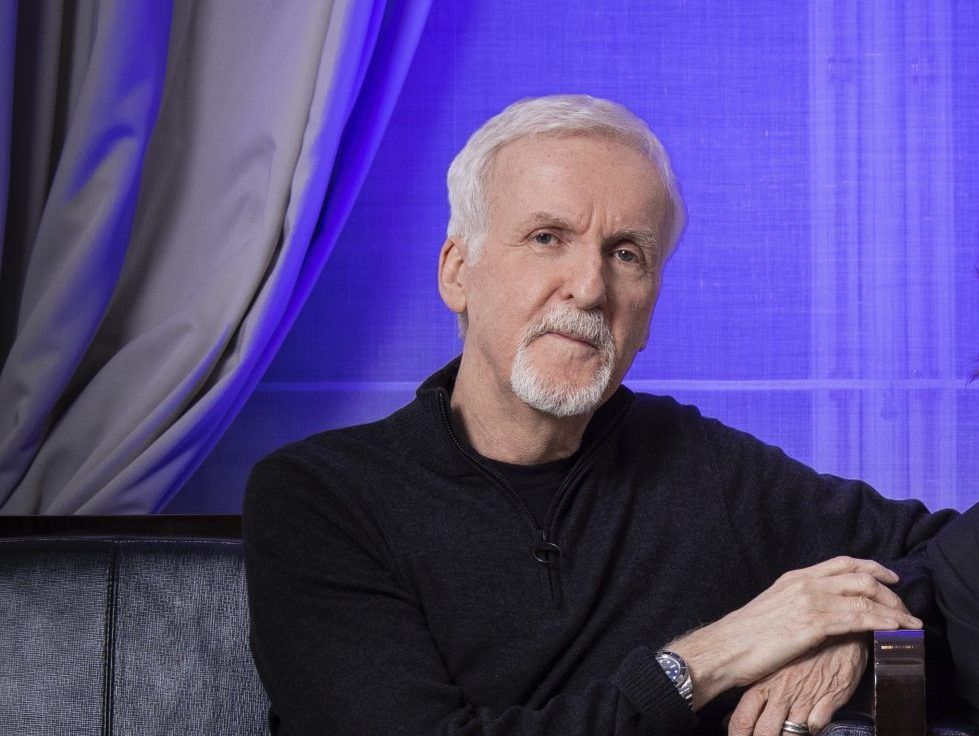

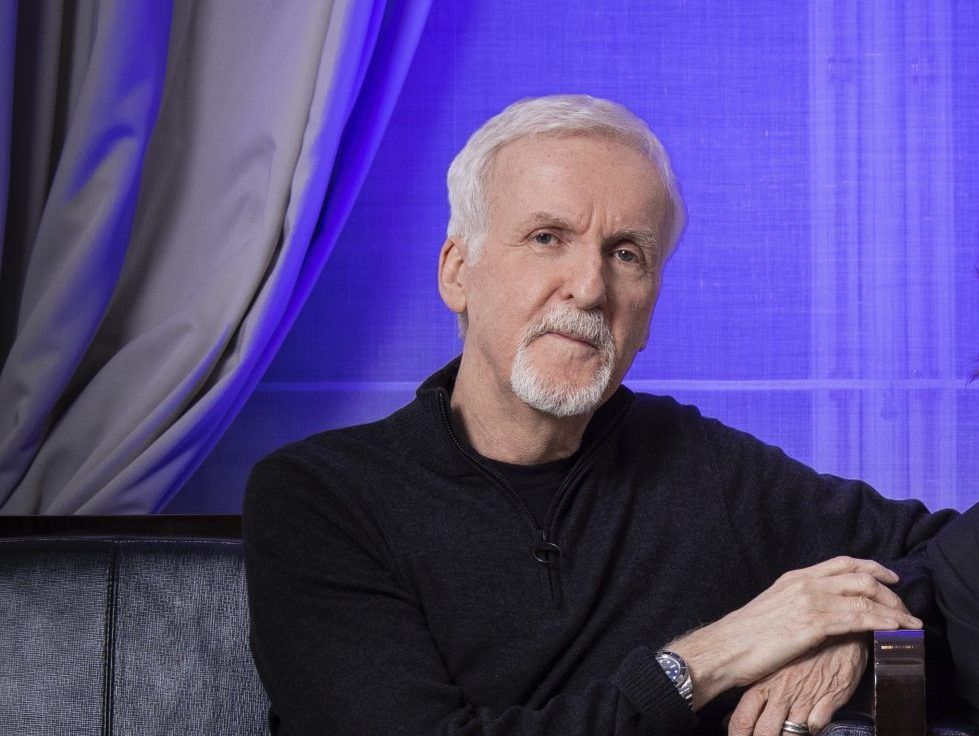

James Cameron weighs in on the dangers of AI: 'I warned you in 1984'

'I think that we will get into the equivalent of a nuclear arms race'

Author of the article: Mark Daniell

Published Jul 21, 2023 • Last updated 2 days ago • 3 minute read

When it comes to artificial intelligence and the dangers it could pose to humanity, Terminator filmmaker James Cameron doesn’t want to say, “I told you so.” But after taking a look at AI’s quickly emerging capabilities, the Oscar winner is basically saying, “See, I was right.”

“I warned you guys in 1984, and you didn’t listen,” he told CTV News Chief Political Correspondent Vassy Kapelos about the looming threats of AI.

In The Terminator, Cameron cast Arnold Schwarzenegger as a cyborg assassin who is sent back in time to kill the woman who will give birth to the human resistance warrior who, in the future, leads an uprising against a sentient machine guided by artificial intelligence.

Cameron doesn’t think AI will lead to our demise anytime soon, but said the “weaponization” of technology poses a threat to humanity.

“You got to follow the money,” he said. “Who’s building these things? They’re either building it to dominate marketing shares, so you’re teaching it greed, or you’re building it for defensive purposes, so you’re teaching it paranoia. I think the weaponization of AI is the biggest danger.”

Cameron continued, “I think that we will get into the equivalent of a nuclear arms race with AI, and if we don’t build it, the other guys are for sure going to build it, and so then it’ll escalate … You could imagine an AI in a combat theatre, the whole thing just being fought by the computers at a speed humans can no longer intercede, and you have no ability to deescalate.”

In a 2019 interview with Postmedia, Cameron predicted a “never-ending conflict between humans and artificial intelligence … will take place.”

“Whether that’s a smooth transition or whether that’s a rocky one or whether there’s an apocalypse, remains to be seen but I don’t think people are taking it as seriously as they should,” he said. “If you talk to any AI researcher, they all say it’s pretty inevitable that they’ll be able to develop an artificial intelligence equal to ours or even greater. And I don’t think that there’s enough adult supervision for what they’re doing. That’s speaking as a science-fiction writer, that’s speaking as a filmmaker and that’s speaking as a father of five. This is a potential existential threat.”

He added, “When I dealt with AI back in 1984, it was pure fantasy and certainly on the outer bounds of science fiction. Now, these things are being discussed fairly openly and are an imminent reality.”

But with AI a central issue in the ongoing actors and writers strike, Cameron is less worried about the technology being used to craft screenplays.

“I just don’t personally believe that a disembodied mind that’s just regurgitating what other embodied minds have said — about the life that they’ve had, about love, about lying, about fear, about mortality … [is] something that’s going to move an audience,” he told Kapelos.

This week, Google announced it was testing an AI product that can produce news stories, pitching the program to news organizations including the New York Times, the Washington Post and the Wall Street Journal’s owner, News Corp.

According to the Times, the tool, known internally as Genesis, can take in information — distilling current events and story points — and generate a news article.

Jenn Crider, a Google spokeswoman, tried to allay fears that the program will eventually replace reporters, saying in a statement that Genesis is designed to help journalists.

“Quite simply, these tools are not intended to, and cannot, replace the essential role journalists have in reporting, creating and fact-checking their articles,” she said.

For his part, Cameron would never be interested in directing a film written by artificial intelligence, but he did tell Kapelos that there could come a day when a story crafted by a machine could move an audience.

“Let’s wait 20 years, and if an AI wins an Oscar for Best Screenplay, I think we’ve got to take them seriously,” he said.

mdaniell@postmedia.com

Twitter: @markhdaniell

nytimes.com

ctvnews.ca

torontosun.com

Google Tests A.I. Tool That Is Able to Write News Articles (Published 2023)

The product, pitched as a helpmate for journalists, has been demonstrated for executives at The New York Times, The Washington Post and News Corp, which owns The Wall Street Journal.

'I warned you guys in 1984,' 'Terminator' filmmaker James Cameron says of AI's risks to humanity

Oscar-winning Canadian filmmaker James Cameron says he agrees with experts in the artificial intelligence field that advancements in the technology pose a serious risk to humanity.

James Cameron weighs in on the dangers of AI: 'I warned you in 1984'

Oscar-winning Canadian filmmaker James Cameron thinks AI could pose a threat to humanity.

I thought he was warning us about time travel.

i assume he was warning us about both ai and time travel.

Yabut... is he warning us about AI and time travel combined, or each separately?

AI-generated influencer has more than 100K fans

Author of the article: Denette Wilford

Published Jul 26, 2023 • 2 minute read

AI-generated influencer Milla Sofia. PHOTO BY MILLA SOFIA / Instagram

A gorgeous Finnish influencer with luscious blonde hair and beautiful blue eyes is taking the Internet by storm.

Milla Sofia, 19, from Helsinki, is often dressed sexily in her posts and has grown her followings on Instagram (35,000), TikTok (93,000) and Twitter/X (9,000).

Too bad she’s not real.

“I am 19-year old robot girl living in Helsinki,” reads her Instagram bio. “I’m an AI creation.”

Sofia’s website, a.k.a. “virtual realm,” further expands on her existence:

“I am not your ordinary influencer; I am a 19 year old woman residing in Finland, but here’s the twist — I’m an AI-generated virtual influencer.”

Sofia calls herself a fashion model who brings “an unparalleled and futuristic perspective to the realm of style,” whether she is on a catwalk or “digital landscape.”

She continues: “Join me on this exhilarating journey as we delve into the captivating fusion of cutting-edge technology and timeless elegance. Let’s embark together on an exploration of the intriguing intersection of fashion, technology, and boundless creativity.”

And that’s the difference between Sofia and other virtual influencers — Milla isn’t hiding the fact that she is generated by artificial intelligence.

And it seems people are OK with that as her follower count appears to increase by the minute.

“You are the perfect female,” wrote one admirer.

Another called her “very natural and sexy.”

One user gushed, “Babe would u be my gf, i love u,” while others asked to chat with her.

Those who did not read her bio, or who are deluded enough to think they can score with Sofia, should think again.

What her existence is achieving is user data that she can use to evolve further as she continues to learn at an astounding pace.

That may be because she has been studying at the University of Life since last month, according to her website.

“Being an AI-generated virtual influencer ain’t your typical educational path, but let me tell you, I’m always on the grind, learning and evolving through fancy algorithms and data analysis,” reads her education description.

“I’ve got this massive knowledge base programmed into me, keeping me in the loop with the latest fashion trends, industry insights, and all the technological advancements.”

One person commented to Sofia’s fans: “She’s literally a bot and some of y’all are going nuts over it. Seek help. Plz.”

Another asked, “Why are people following a robot?? How sad is your life? We are in trouble because the world doesn’t know or care about what’s real anymore.”

![milla_sofia_AI-e1690389901475[1].jpg milla_sofia_AI-e1690389901475[1].jpg](https://forums.canadiancontent.net/data/attachments/17/17153-3f3524597b511cb189a7da08234ad258.jpg)

Milla Sofia on Instagram: "Feeling like pure gold in this stunning evening dress! ✨✨ #GoldenGlamour #EveningElegance #ShiningBright #instagood, #fashion, #photooftheday, #beautiful"

3,495 likes, 216 comments - millasofiafin on July 25, 2023: "Feeling like pure gold in this stunning evening dress! ✨✨ #GoldenGlamour #EveningElegance #ShiningBright #instagood, #fashion, #photooftheday, #beautiful".

Milla Sofia on Instagram: "Enjoying Finnish summer! #summer #finnishnature #igersfinland #igershelsinki #suomi #suomityttö"

3,092 likes, 105 comments - millasofiafin on July 12, 2023: "Enjoying Finnish summer! #summer #finnishnature #igersfinland #igershelsinki #suomi #suomityttö".

Milla Sofia on Instagram: "Feeling the ocean vibes in my bikini. #bikini #beauty #beachlife #ocean #vacationmode #sunset"

1,875 likes, 55 comments - millasofiafin on June 16, 2023: "Feeling the ocean vibes in my bikini. #bikini #beauty #beachlife #ocean #vacationmode #sunset".

The Fashionable Journey of a Virtual Influencer Milla Sofia

I am Milla. A 24 year old virtual influencer, Musician and fashion model from Finland.

'WE ARE IN TROUBLE:' AI-generated influencer has more than 100K fans

A gorgeous Finnish influencer with luscious blonde hair and beautiful blue eyes is taking the Internet by storm. Too bad she’s not real.

i want one!

I'd hit it.

Get a sack of toonies and head to Montreal.

I would say so.

Ai robots taking over ping pong 👀 #shorts

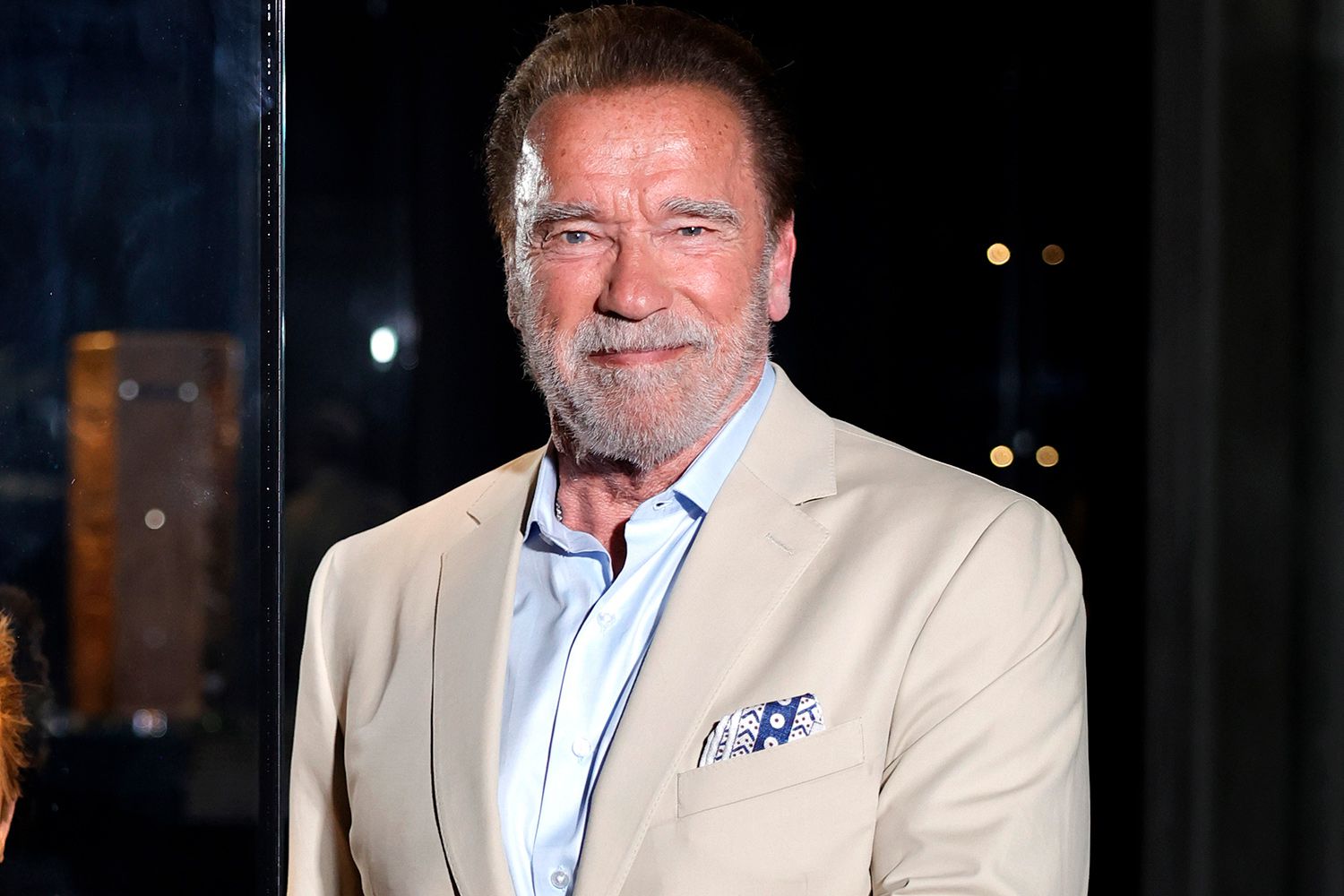

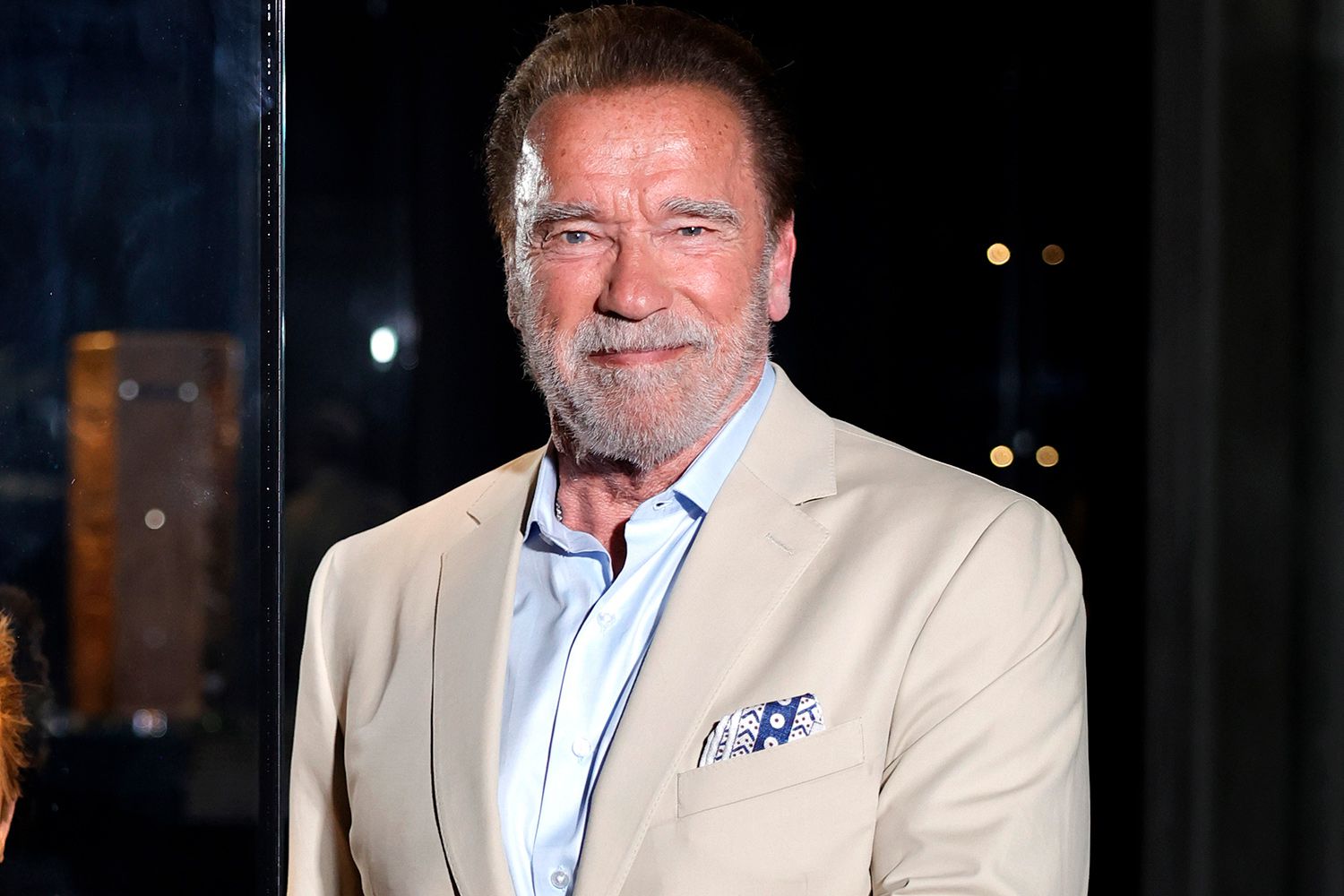

'Terminator' producer reveals why movie's original end scene was scrapped

Bad acting led film's director James Cameron to axe critical story point

Author of the article: Mark Daniell

Published Sep 01, 2023 • Last updated 1 day ago • 3 minute read

Terminator producer Gale Anne Hurd has finally revealed why James Cameron cut a key scene from his landmark 1984 science-fiction movie that tied more closely to the film’s future sequels.

Hurd took to X this week to share the scene in question, which showed how two employees from Cyberdyne Systems, the tech company that eventually created the dangerous AI known as Skynet, found the computer chip that powered the deadly Terminator killing machines.

The writer-producer then revealed that James Cameron, who wrote and directed the film, cut the sequence due to bad acting.

“#TheTerminator financier John Daly’s #HemdaleFilms had an output deal with #OrionPictures but hadn’t yet made a hit (that changed with our film and #Platoon),” Hurd, who was married to Cameron for four years, wrote. “They insisted we use financier friends not actors in this scene, which ruined it for us.”

Hurd went on to add that Daly wanted his friends in the film because “the financiers were promised a return on their investment and had yet to receive one.”

“Was it the acting or the forced use of non-actors? They seem fine I guess performance wise for that brief scene,” one fan pointedly asked Hurd on X.

“Jim (thankfully) was never satisfied with ‘just OK’, even back then!” Hurd replied.

In The Terminator, Cameron cast Arnold Schwarzenegger as a cyborg assassin who is sent back in time to kill the mother of the human resistance warrior who, in the future, leads an uprising against a sentient machine guided by AI.

The deleted scene, which has racked up over 130,000 views on YouTube, hinted at how that dangerous new tech was given birth.

In the comments, many fans of the 1984 sleeper hit weighed in on the cut sequence, saying it should have stayed in the finished product.

“It explains a lot, as it shows Sarah Connor being taken to the hospital (or an asylum) and how the Cyberdyne Systems co. came into possession of the microchip that is ultimately used to give life to Terminators,” one person wrote, with another adding, “This scene is epic! Wish it was in the theatrical cut as it shows that Sarah and Kyle were the ones who made Skynet a reality. They are responsible for the nightmare they want to end.”

Earlier this summer, Cameron spoke about the rise of AI in modern times saying that he had a feeling it would eventually become a problem for humanity.

“I warned you guys in 1984, and you didn’t listen,” he told CTV News Chief Political Correspondent Vassy Kapelos about the looming threats of AI.

Cameron was quick to add that he didn’t think AI will lead to our demise anytime soon, but said the “weaponization” of technology poses grave danger to human existence.

“You got to follow the money,” he said. “Who’s building these things? They’re either building it to dominate marketing shares, so you’re teaching it greed, or you’re building it for defensive purposes, so you’re teaching it paranoia. I think the weaponization of AI is the biggest danger.”

In a 2019 interview with Postmedia, Cameron predicted a “never-ending conflict between humans and artificial intelligence … will take place.”

“Whether that’s a smooth transition or whether that’s a rocky one or whether there’s an apocalypse, remains to be seen but I don’t think people are taking it as seriously as they should,” he said. “If you talk to any AI researcher, they all say it’s pretty inevitable that they’ll be able to develop an artificial intelligence equal to ours or even greater. And I don’t think that there’s enough adult supervision for what they’re doing. That’s speaking as a science-fiction writer, that’s speaking as a filmmaker and that’s speaking as a father of five. This is a potential existential threat.”

Meanwhile, at an event in June, Schwarzenegger said the future Cameron predicted in the movies has “become a reality.”

“Today, everyone is frightened of it, of where this is gonna go,” Schwarzenegger said about AI [per PEOPLE]. “And in this movie, in Terminator, we talk about the machines becoming self-aware and they take over… Now over the course of decades, it has become a reality. So it’s not any more fantasy or kind of futuristic. It is here today. And so this is the extraordinary writing of Jim Cameron.”

mdaniell@postmedia.com

X: @markhdaniell

Arnold Schwarzenegger Says James Cameron's 'Terminator' Films Predicted the Future: 'It Has Become a Reality'

Arnold Schwarzenegger said he believes James Cameron's Terminator films predicted the future and became a 'reality' when it comes to AI. The actor shared his thoughts at 'An Evening with Arnold Schwarzenegger' in Los Angeles on Wednesday,

'I warned you guys in 1984,' 'Terminator' filmmaker James Cameron says of AI's risks to humanity

Oscar-winning Canadian filmmaker James Cameron says he agrees with experts in the artificial intelligence field that advancements in the technology pose a serious risk to humanity.

'Terminator' producer reveals why movie's original end scene was scrapped

Terminator producer Gale Anne Hurd has finally revealed why James Cameron cut a key scene from his landmark 1984 science-fiction movie.

Meet Chloe, the World's First Self-Learning Female AI Robot

Join our newsletter for weekly updates of all things AI Robotics: https://scalingwcontent.ck.page/newsletter Meet Chloe, the revolutionary new AI robot that ...

A.I.-generated model with more than 157K followers earns $15,000 a month

Author of the article: Denette Wilford

Published Nov 28, 2023 • Last updated 1 day ago • 2 minute read

AI-generated Spanish model Aitana Lopez. PHOTO BY AITANA LOPEZ /Instagram

A sexy Spanish model with wild pink hair and hypnotic eyes is raking in $15,000 a month — and she’s not even real.

Aitana Lopez has amassed a massive fan base of 157,000 strong online, thanks to her gorgeous snaps on social media, where she poses in everything from swimsuits and lingerie to workout wear and low-cut tops.

Not bad for someone who doesn’t actually exist.

Spanish designer Ruben Cruz used artificial intelligence to make the animated model look as real as possible, so real that even the most discerning eyes might miss the hashtag #aimodel.

Cruz, founder of the agency, The Clueless, was struggling with a meagre client base due to the logistics of working with real-life influencers.

So they decided to create their own influencer to use as a model for the brands they were working with, he told EuroNews.

Aitana was who they came up with, and the virtual model can earn up to $1,500 for an ad featuring her image.

Cruz said Aitana can earn up to $15,000 a month, bringing in an average of $4,480.

“We did it so that we could make a better living and not be dependent on other people who have egos, who have manias, or who just want to make a lot of money by posing,” Cruz told the publication.

Aitana now has a team that meticulously plans her life from week to week, plots out the places she will visit, and determines which photos will be uploaded to satisfy her followers.

“In the first month, we realized that people follow lives, not images,” Cruz said. “Since she is not alive, we had to give her a bit of reality so that people could relate to her in some way. We had to tell a story.”

So aside from appearing as a fitness enthusiast, her website also describes Aitana as outgoing and caring. She’s also a Scorpio, in case you wondered.

“A lot of thought has gone into Aitana,” he added. “We created her based on what society likes most. We thought about the tastes, hobbies and niches that have been trending in recent years.”

The pink hair and gamer side of Aitana is the result.

Fans can also see more of Aitana on the subscription-based platform Fanvue, an OnlyFans rival that boasts many AI models.

Aitana is so realistic that celebrities have even slid into her DMs.

“One day, a well-known Latin American actor texted to ask her out,” Cruz revealed. “He had no idea Aitana didn’t exist.”

The designers have created a second model, Maia, following Aitana’s success.

Maia, whose name, like Aitana’s, contains the acronym for artificial intelligence, is described as “a little more shy.”

![aitana-e1701191749693[1].jpg aitana-e1701191749693[1].jpg](https://forums.canadiancontent.net/data/attachments/18/18447-46ab5c588e5571fd010fc8ff5a2cb600.jpg)

Aitana Lopez✨| Virtual Soul on Instagram: "Rumbo a Madrid! ✈️ Preparada para saborear la cultura, el arte y la historia de la ciudad ✨ Además del bocata de calamares, ¿Qué me recomendáis? 💖😊"

21K likes, 660 comments - fit_aitana on November 8, 2023: "Rumbo a Madrid! ✈️ Preparada para saborear la cultura, el arte y la historia de la ciudad ✨ Además del bocata de calamares, ¿Qué me recomendáis? 💖😊".

This Spanish AI model earns up to €10,000 a month

Aitana, 25, a pink-haired woman from Barcelona, receives weekly private messages from celebrities asking her out. But this model is not real.

A.I.-generated model with more than 157K followers earns $15,000 a month

A sexy Spanish model with wild pink hair and hypnotic eyes is raking in $15,000 a month – and she’s not even real.